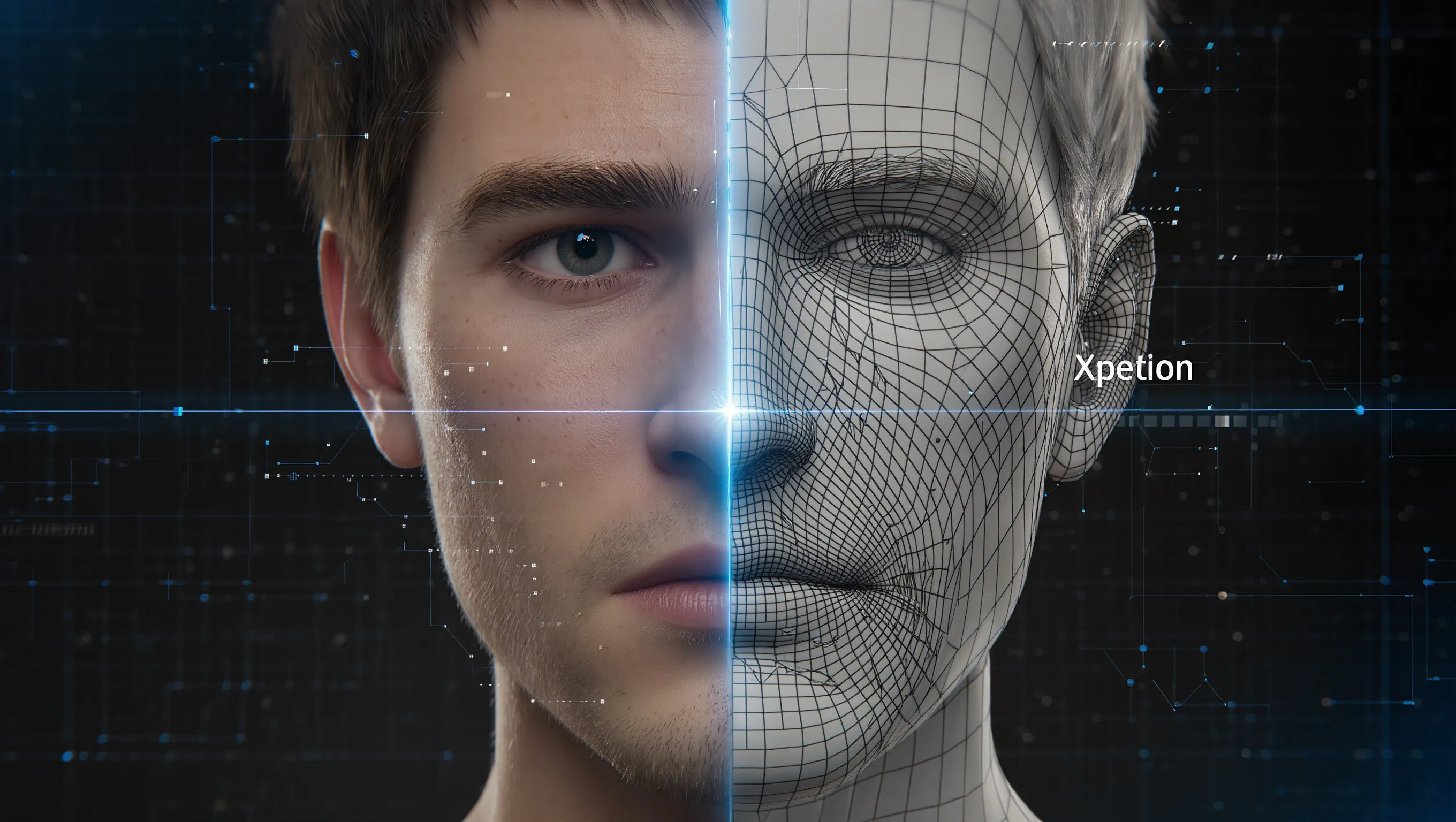

Dagestan Researchers Develop Neural Network System to Detect Deepfakes

Scientists at Dagestan State University (DGU) have introduced a neural network-based system designed to identify deepfakes. The team reports that the Xception model achieved what they describe as an optimal balance between detection accuracy (88.5 percent) and processing speed (89 milliseconds per frame).
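To put the reported latency in perspective, per-frame processing time can be converted into throughput. The sketch below uses only the 89-millisecond figure reported by the team; the function names and the 25 frames-per-second video rate are illustrative assumptions, not details from the DGU work.

```python
def frames_per_second(latency_ms: float) -> float:
    """Convert per-frame latency in milliseconds to frames per second."""
    if latency_ms <= 0:
        raise ValueError("latency_ms must be positive")
    return 1000.0 / latency_ms


def realtime_factor(latency_ms: float, video_fps: float) -> float:
    """Seconds of compute per second of video if every frame is analyzed."""
    return video_fps * latency_ms / 1000.0


REPORTED_LATENCY_MS = 89.0  # figure reported by the DGU team
ASSUMED_VIDEO_FPS = 25.0    # assumption: a common frame rate, not from the source

throughput = frames_per_second(REPORTED_LATENCY_MS)
factor = realtime_factor(REPORTED_LATENCY_MS, ASSUMED_VIDEO_FPS)

print(f"Throughput: {throughput:.1f} frames per second")
print(f"Compute per second of 25 fps video: {factor:.3f} s")
```

Under these assumptions the model handles roughly 11 frames per second, so analyzing every frame of a 25 fps stream would take longer than the video itself. A deployment might therefore sample frames rather than process all of them, although the source does not say how the DGU system handles this.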

Deepfakes as a Security Threat

The project addresses a rapidly evolving threat landscape. Deepfakes are highly realistic media files, typically produced with deep generative models such as generative adversarial networks (GANs), that replicate a person’s appearance and speech. Such content can mislead audiences and has been exploited by fraudsters to steal personal data and money. Malicious actors also use manipulated video to erode trust in official information sources and to spread disinformation. Reliable automated detection of falsified video has therefore become critical to digital security.

Domestic research in this field strengthens Russia’s scientific sovereignty in AI security and supports workforce development in computer vision and media forensics. On the international stage, demand for improved detection algorithms continues to grow, giving the project broader relevance beyond national boundaries.

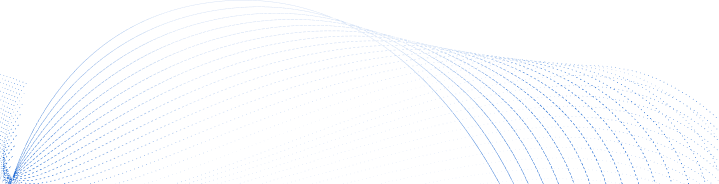

Building a Technical Foundation

The Russian system is designed to interoperate with global media forensics technologies by aligning with DFDC (the Deepfake Detection Challenge dataset), a widely used international benchmark. That compatibility creates a pathway toward export-oriented software products. The algorithms also have wide practical applications: they can be integrated into social networks and video hosting platforms, or embedded in video surveillance systems and specialized tools used by media organizations, corporate security departments and law enforcement agencies.

In general, the work lays the groundwork for high-precision content verification technologies capable of countering advanced digital synthesis methods. At the same time, researchers acknowledge that detection systems require continuous upgrades. Techniques for generating synthetic media are evolving quickly, forcing defensive tools to adapt at a similar pace.

This arms race is global. International studies highlight the need to reduce system vulnerability to new falsification strategies. Another priority is keeping algorithms stable in real-world information environments, where low video quality, compression and transmission artifacts can degrade detection performance.

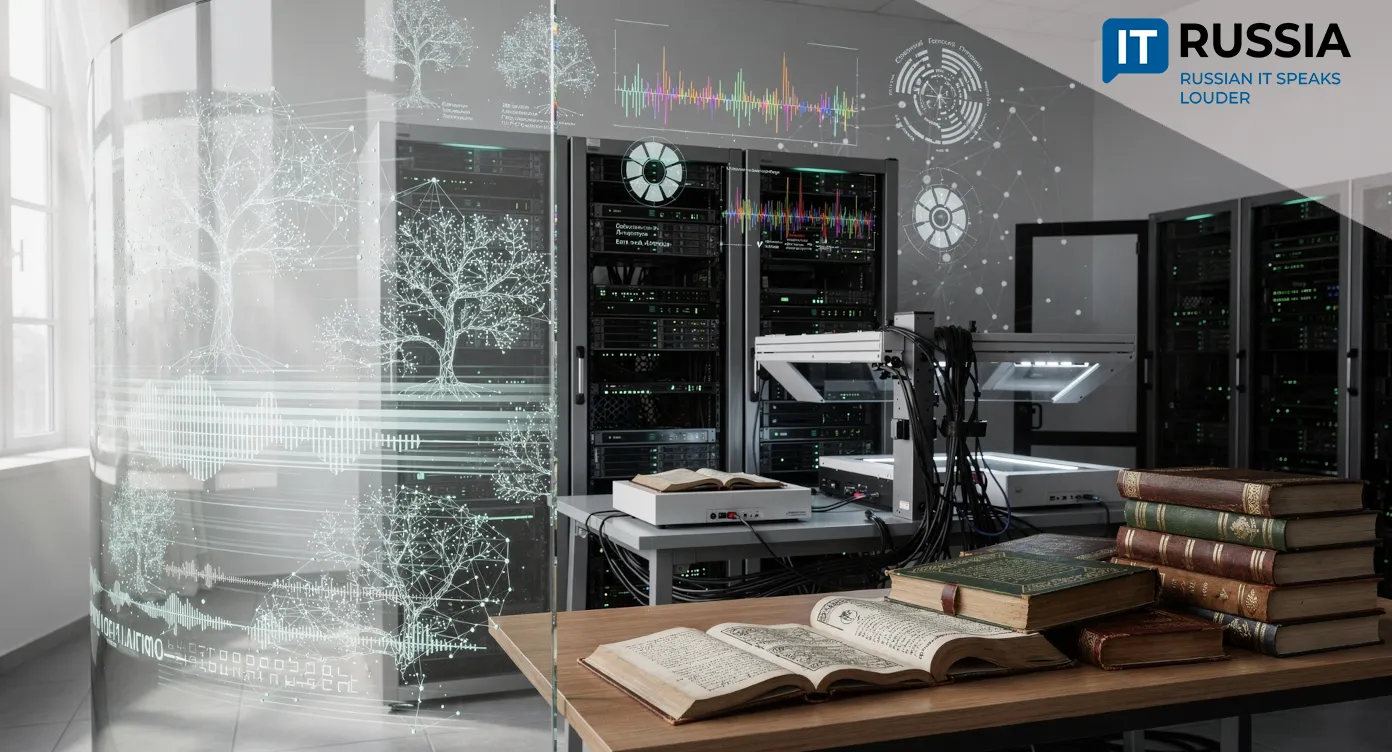

Global Research Context

Across the scientific community, researchers are experimenting with diverse neural network architectures to combat deepfakes. Many approaches analyze content to identify anomalies that signal manipulation. Techniques include comparing lip movements with corresponding audio tracks, evaluating acoustic parameters and detecting inconsistencies within image structures. One of the early detection models relied on identifying incompatible visual features inside a single frame, improving accuracy across multiple datasets.
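The lip-to-audio comparison mentioned above can be illustrated with a deliberately simplified sketch. It assumes two hypothetical per-frame signals, a mouth-opening measurement and an audio loudness value, and flags a clip when the two are weakly correlated. The function names, the toy data and the 0.3 threshold are assumptions made for illustration; they do not describe any specific published detector, and real systems generally rely on learned models rather than a hand-set rule.

```python
import math


def pearson(xs, ys):
    """Pearson correlation of two equal-length sequences; 0.0 if either is constant."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        return 0.0
    return cov / math.sqrt(vx * vy)


def looks_out_of_sync(mouth_opening, audio_energy, threshold=0.3):
    """Flag a clip when lip motion and audio loudness are weakly correlated."""
    return pearson(mouth_opening, audio_energy) < threshold


# Toy per-frame values, made up for illustration only.
audio = [0.1, 0.8, 0.9, 0.2, 0.1, 0.7, 0.8, 0.1]
synced_lips = [0.0, 0.7, 1.0, 0.3, 0.1, 0.6, 0.9, 0.0]
mismatched_lips = [0.9, 0.1, 0.0, 0.8, 0.9, 0.2, 0.1, 0.8]

print(looks_out_of_sync(synced_lips, audio))
print(looks_out_of_sync(mismatched_lips, audio))
```

In this toy data the first clip's lip motion rises and falls with the audio, while the second moves in the opposite direction, so only the second is flagged.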

Other teams have proposed methods that extract localized manipulation cues so that algorithms generalize better to previously unseen data. For example, an ensemble CNN-CapsNet architecture improves recognition of complex objects even when they are partially occluded or viewed from different angles and at different scales.

Research capacity within universities is also expanding in media analytics. A 2024 study conducted by the Artificial Intelligence Research Institute (AIRI) in collaboration with the National Research University Higher School of Economics (NIU VShE) examined methods to improve 3D object detection and recognition. Researchers experimentally confirmed the advantages of using smaller generative AI models trained on high-quality datasets to fine-tune larger models and solve 3D detection tasks. Separately, according to the study “Towards Generalized Deepfake Detection Using a Local Autoencoder,” the local autoencoder (LAE) approach outperformed state-of-the-art benchmarks by 6.52 percent, 12.03 percent and 3.08 percent on three deepfake detection tasks, measured by generalization accuracy on previously unseen manipulations.

Toward a National AI Security Platform

The DGU initiative contributes to expanding domestic expertise in AI security. Researchers relied on internationally recognized datasets, allowing them to benchmark their system against results reported elsewhere. The 88.5 percent accuracy figure serves as a baseline for further refinement, and the developers acknowledge that additional optimization is required to reach maximum operational effectiveness.

Experts expect that within the next two years, the team will deepen its research and introduce pilot services for media verification. Over a three- to five-year horizon, the focus is expected to shift toward adaptive models capable of countering emerging synthesis techniques, alongside commercialization efforts. In the long term, the research could form the foundation of a national AI platform designed to automate monitoring of the information environment, reducing the risk of large-scale falsification campaigns.